AI Reasoning: Are We Expecting Too Much?

Recent research on artificial intelligence reasoning models has sparked debate about what they can actually do. One paper suggests these models cannot reason, while another indicates that in certain respects they think like humans. This raises the question: are our expectations for AI realistic, or should we accept their limitations?

AI Models and Human-Like Conceptualization

A research paper explored how large language models classify images and compared this with human conceptualization. The researchers presented both AI models and humans with sets of three images and asked them to identify the odd one out.
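
The odd-one-out task can be modeled computationally in several ways. The following is a minimal sketch under our own assumptions, not the study's method: each image is represented by a hypothetical concept vector, the two items with the highest dot-product similarity are treated as the matching pair, and the remaining item is reported as the odd one out. The function name `odd_one_out` and the toy vectors are illustrative only.

```python
from itertools import combinations


def dot(a, b):
    """Dot product of two equal-length vectors (used here as a similarity score)."""
    return sum(x * y for x, y in zip(a, b))


def odd_one_out(triplet):
    """Return the index (0, 1 or 2) of the odd item in a triplet of vectors.

    Assumption: the most similar pair "belongs together", so the
    item not in that pair is the odd one out.
    """
    if len(triplet) != 3:
        raise ValueError("expected exactly three vectors")
    # Pick the pair of indices whose vectors are most similar.
    best_pair = max(
        combinations(range(3), 2),
        key=lambda pair: dot(triplet[pair[0]], triplet[pair[1]]),
    )
    # The single index left over is the odd one out.
    (odd,) = set(range(3)) - set(best_pair)
    return odd


# Hypothetical toy vectors for "cat", "dog" and "car".
cat, dog, car = [0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.0, 0.1, 0.9]
print(odd_one_out([cat, dog, car]))  # prints 2 ("car")
```

The three-dimensional vectors are invented for illustration; a real model would derive much higher-dimensional representations from the images themselves.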

The findings revealed that AI models develop conceptual representations of objects similar to those of humans.

Furthermore, the study found a strong alignment between activity patterns in the model network and neural activity patterns in the human brain.

This indicates that object representations in large language models share fundamental similarities with human conceptual knowledge, although they are not identical.

In other words, current AI models appear to be learning to think like humans, at least to some extent.

The Apple Paper and the Limits of Reasoning Models

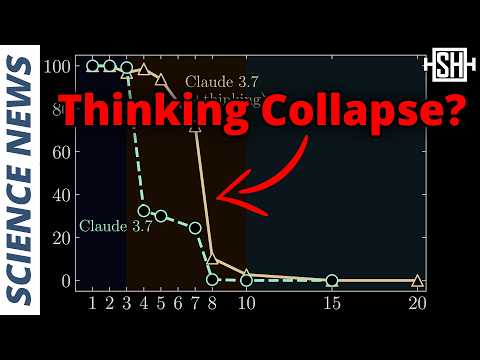

A headline-making paper from Apple examined how large reasoning models perform as problems grow in complexity. These models are essentially enhanced large language models with "chain of thought" capabilities: they break a prompt down into smaller intermediate steps before answering.

The study revealed that these "frontier large reasoning models" experience a "complete accuracy collapse" beyond a certain level of complexity.

However, a subsequent paper argued that this collapse might instead reflect limits on the number of tokens (that is, the length of text) the models can output, rather than a fundamental inability to reason.

Despite this debate, the Apple paper's initial reception led many to conclude that current AI models cannot think like humans.

Defining Reasoning and Thinking

The ability to execute an algorithm or classify images may not be a sufficient measure of reasoning or thinking in general. Many people do not know how to run specific algorithms. Does that mean they cannot reason?

Current AI models show some capacity for thinking and reasoning, but it is limited and does not generalize well. The models are improving gradually with more effort and training. However, they have not yet developed hallmarks of human intelligence such as deductive and inductive reasoning, abstract thinking, or rapid learning.

The Future of AI and the Pursuit of Human-Level Intelligence

Current AI models can execute algorithms, but that alone is not groundbreaking. The core issue is that the current approach may not be the path to human-level artificial general intelligence (AGI). It remains to be seen when the companies heavily invested in these models will acknowledge these limitations, and the potential "accuracy collapse" of the idea that AGI is imminent.

The Importance of Internet Security

Artificial intelligence is becoming increasingly common, including in coding, and this could soon create major security risks for internet browsing. It is therefore important to use tools that protect your online activity. A virtual private network (VPN) creates a secure connection for your internet browsing. A VPN hides your IP address and encrypts your data, preventing others from spying on your activity or tracking your location. VPNs can also be used to bypass geographic restrictions on content.
